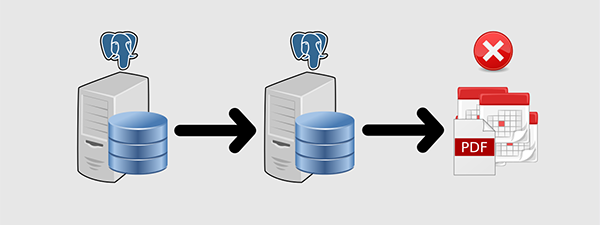

The last month I was working on fixing some issues on a database system I am maintaining. We have a PostgreSQL master instance that is being replicated using streaming replication to a slave instance. As there is a lot of connections on the master server which are using a lot of resources we had an idea to move all the reporting stuff to the slave server. And this is usually the way to do it. Why spend critical resources on the master when you only have to read data and generate reports that are displayed as web pages? So, after a few tweaks to our application we had the first reports from the slave. And everybody was happy. Well, until someone run a slightly bigger report. And 30 seconds later you get an error that looks like this:

ERROR: canceling statement due to conflict with recovery

SQL state: 40001

Detail: User query might have needed to see row versions that must be removed.

Or one of the following flavours:

FATAL: terminating connection due to conflict with recovery

SQL state: 40001

Detail: User was holding a relation lock for too long.

Hint: In a moment you should be able to reconnect to the database and repeat your command.

FATAL: terminating connection due to conflict with recovery

SQL state: 40001

Detail: User query might have needed to see row versions that must be removed.

Hint: In a moment you should be able to reconnect to the database and repeat your command.

So what is going on here? Well, our slave instance has some limitations regarding the queries that you can run on it. On our master new data is coming all the time and some of the existing rows are changed by this new data. When these changed rows are replicated on the slave the queries that run on it might be canceled or even worse, their connection can be terminated. And this is a well known problem, so there are some parameters you can change on the slave to fix this. Or better yet, you can discover that using PostgreSQL you develop superpowers and are now able to stop time [1].

| [1] | Well, you can’t stop time, but you can do almost as good. Read on :) |

Simulation preparations

To demonstrate this problem I will need:

- A PostgreSQL master and a slave connected with streaming replication

- A table with some data

CREATE TABLE a(

id BIGINT NOT NULL,

c TIMESTAMPTZ NOT NULL DEFAULT now()

);

INSERT INTO a

(SELECT generate_series(1, 10000));

- Several consoles/pgAdminIII query windows to run queries on. I will mark the connection on each server with the connection number.

Simulation 1 - basic problem demonstration

We have a table a with 10000 rows of data and we want to change some of it. To do that we can run the following script:

-- master - connection 1 = mc1

WITH random_rows AS (

SELECT floor(random() * 10000) + 1 as id

FROM generate_series(1, 20)

)

UPDATE a

SET c = now()

WHERE id IN (SELECT id FROM random_rows);

SELECT txid_current();

You should run this as a whole so that the last statement can tell you the transaction ID that will be present on the rows that the statement has changed.

On the other server you can run a very intensive reporting query. Like this:

-- slave - connection 1 = sc1

BEGIN TRANSACTION;

SELECT * FROM a;

SELECT pg_sleep(300);

COMMIT;

While the sc1 is running you can run the mc1 query u couple of times, and about 30 seconds after that on the sc1 you will get the error.

So why 30 seconds? Easy, the parameters max_standby_archive_delay and max_standby_streaming_delay have a default value of 30 seconds. And the docs say:

When Hot Standby is active, this parameter determines how long the standby server should wait before canceling standby queries that conflict with about-to-be-applied WAL entries, as described in Section 25.5.2. max_standby_streaming_delay applies when WAL data is being received via streaming replication. The default is 30 seconds. Units are milliseconds if not specified. A value of -1 allows the standby to wait forever for conflicting queries to complete. This parameter can only be set in the postgresql.conf file or on the server command line.

Note that max_standby_streaming_delay is not the same as the maximum length of time a query can run before cancellation; rather it is the maximum total time allowed to apply WAL data once it has been received from the primary server. Thus, if one query has resulted in significant delay, subsequent conflicting queries will have much less grace time until the standby server has caught up again.

So, if I am reading this correctly if I can create a specific order of reporting queries on the slave, I can stop the WAL applying process. As a consequence of that I can stop time on the slave :) Well, having superpowers is great, but I will have to prove that I have them fist.

Simulation 2 - Can I stop time?

So, lets set max_standby_archive_delay and max_standby_streaming_delay to -1 fist and reload the configuration on both servers. To be able to detect the time has stopped we can tun the following query:

-- slave - connection 2 = sc2

SELECT t.id, t.xmin, t.c, now(), clock_timestamp()

FROM a t

WHERE t.c = (SELECT max(c) FROM a)

In the xmin column you can see the transaction ID that created this row, the c column will be the time when the row was created. now() will return the time this transaction started, and clock_timestamp() will return the current time on the server running the query.

So let’s run sc1, then sc2, and after that run mc1 a two times. For example, sc2 will tell you that the last inserted rows were inserted in the XID=714. And mc1 will tell you that ih has created rows in XIDs 715 and 716. If you rerun sc2 you will see the last XID equals 715. Hmmm…. So, we advanced one transaction. I was expecting that we would stop. As a mater of fact, this behaviour is not consistent, and I think id depends on the rows and pages that were changed. Sometimes the XID doesn’t change, but this is very rare. In most of my simulations I got an increment of +1. And the time function tell me the time didn’t stop. I was hoping to get some superpowers, but nothing.

But, it is not that bad. Actually time on the slave goes on, but the data on the slave mimics the situation when the time on the master has stopped.

Can I use this knowledge to create a disaster scenario? Become evil?

Simulation 3 - Breaking bad

Out reporting query lasts only 300 seconds, so it slows down time for only that amount of time. If I can run a similar query before the first one finishes, it should slow time down during its duration. Let’s use the same query:

-- slave - connection 3 = sc3

BEGIN TRANSACTION;

SELECT * FROM a;

SELECT pg_sleep(300);

COMMIT;

You run sc1 and before it finishes run sc3. During this execution you can run mc1 simulating traffic on the master. If you keep running sc2 you will see that after sc1 finishes, XID increases by 1 (or better yet 0). So before sc3 finished, you rerun sc1, and so forth.

We now have 2 reporting queries that are constantly overlapping and causing the slave behave like it is moving very slowly forward in time (or better yet it is frozen in time if you are lucky). This still doesn’t look so bad. No?

Scenario 4 - Denial of service (DOS)

At the end of scenario 3, having the sc1 and sc3 reports constantly overlapping each other, the master crashes and the slave server needs to be promoted. So you issue a promote request (The actual command might vary depending on the linux distribution.):

pg_ctl promote -D /var/lib/postgresql/9.4/main/

And… nothing happens. The slave is not promoted, because WAL replaying is being throttled by the reporting queries. We have no master, and the slave won’t promote until all WAL records are replayed. You can still read data from the slave, but no new data will get in. It sounds pretty bad.

Conclusion

The described scenarios are only theoretical, but with the values of max_standby_archive_delay and max_standby_streaming_delay set to -1, and a steady input of reporting queries, it might just be possible. Also, your reports might be wrong because they are operating on ancient data, and not almost real-time data. And the DOS scenario is really far fetched (but you should really try it at home, not on production).

Setting the hot_standby_feedback = on seems to solve the reporting errors, but not fully. We still got some errors after setting it to on, but I haven’t found a set of example tables/queries to demonstrate that. If I do, I will make a sequel to this post. Untill then, have some sane values for max_standby_archive_delay and max_standby_streaming_delay, let’s say a few minutes so that your longest report will finish. Some will fail, but be prepared for that and you will be ok.